This post was originally published on Josetteorama.

The arrival of the iPhone changed the whole direction of software development for mobile platforms, and has had a profound impact on the hardware design of the smart phones that have followed it.

Not only do these devices know where they are, they can tell you how they're being held. They are powerful enough to overlay data on the camera view and to record and interpret audio, and they can do all of this in real time. These are not just smart phones; these are computers that just happen to be able to make phone calls.

The arrival of the External Accessory Framework was seen, initially at least, as having the potential to open the iOS platform up to a host of external accessories and additional sensors. Sadly, little of the innovation people were expecting actually occurred. While some interesting products are finally starting to arrive on the market, for the most part the External Accessory Framework is being used to support a fairly predictable range of audio and video accessories from big-name manufacturers.

Alasdair Allan demonstrating an Augmented Reality application

The reason for this lack of innovation is usually laid at the feet of Apple's Made for iPod (MFi) licensing program. To develop hardware accessories that connect to the iPod, iPhone, or iPad, you must be an MFi licensee.

Unfortunately, becoming a member of the MFi program is not as simple as signing up as an Apple Developer, and it is a fairly lengthy process. From personal experience I can confirm that the process of becoming an MFi licensee is not for the faint-hearted. And once you’re a member of the program, getting your hardware out of prototype stage and approved by Apple for distribution and sale is not necessarily a simple process.

However, all that started to change with the arrival of Redpark's serial cable. Because it is MFi-approved for the hobbyist market, it lets you connect your iPhone to external hardware very simply. It also lets you prototype new external accessories easily, bypassing much of the pain you would experience trying to do that wholly within the confines of the MFi program.

Another important part of that change was the Arduino. The Arduino, and the open hardware movement that has grown up with it and to a certain extent around it, is enabling a generation of high-tech tinkerers to prototype new ideas with fairly minimal hardware knowledge.

Every so often a piece of technology can become a lever that lets people move the world, just a little bit. The Arduino is one of those levers. While it started off as a project to give artists access to embedded microprocessors for interactive design projects, I think it’s going to end up in a museum as one of the building blocks of the modern world. It allows rapid, cheap prototyping for embedded systems. It turns what used to be fairly tough hardware problems into simpler software problems.

Turning things into software problems makes them more scalable and drastically reduces both development timescales and up-front investment, and, as the whole dot-com revolution has shown, that leads to innovation. Every interesting hardware prototype to come along seems to boast that it is Arduino-compatible, or just plain built on top of an Arduino.

I think the next round of innovation is going to take Silicon Valley, and the rest of us, back to its roots, and that's hardware. If you're a software person, the things that are open and the things that are closed are changing, and so are the skills needed to work with the technology.

Controlling an Arduino directly from the iPad

Alasdair demonstrating an Augmented Reality application

At the start of October I'll be running a workshop on iOS Sensors and External Hardware. It's going to be hardware hacking for iOS programmers, and an opportunity for people to get their hands dirty with both the internal sensors in the phone and external hardware.

The workshop is intended to guide you through the start of that change and get you hands-on with the hardware in your iPhone that you've probably been ignoring until now. You'll learn how to make use of the on-board sensors and combine them to build sophisticated location-aware applications, and also how to extend the reach of these sensors by connecting your iOS device to external hardware.
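As an illustration of what "combining" sensors can mean, a common augmented-reality building block takes a location fix and a compass heading and decides whether a point of interest falls inside the camera's view. The following is a minimal Python sketch, not material from the workshop itself: the function names `initial_bearing` and `in_field_of_view`, the 60° field of view, and the assumption that the heading is already measured from true north are all hypothetical choices made for this example. On iOS the inputs would typically come from Core Location (the coordinate of a `CLLocation` and the `trueHeading` of a `CLHeading`).

```python
import math


def initial_bearing(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Initial great-circle bearing from point 1 to point 2.

    Inputs are latitude/longitude in degrees; the result is in degrees,
    clockwise from true north, in the range [0, 360).
    """
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    x = math.sin(dlon) * math.cos(phi2)
    y = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlon)
    # atan2 returns (-180, 180]; shift into [0, 360)
    return (math.degrees(math.atan2(x, y)) + 360.0) % 360.0


def in_field_of_view(heading: float, bearing: float, fov: float = 60.0) -> bool:
    """True if `bearing` lies within +/- fov/2 degrees of the device `heading`.

    Assumption: `heading` is a true-north compass heading in degrees, and
    `fov` is a hypothetical horizontal camera field of view.
    """
    # Signed angular difference wrapped into [-180, 180)
    diff = (bearing - heading + 180.0) % 360.0 - 180.0
    return abs(diff) <= fov / 2.0
```

In an application this check would be re-run whenever a new location or heading update arrives, and the signed difference could also be used to position the overlay horizontally on screen.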

We'll look at three hardware platforms, the Arduino microcontroller board and the BeagleBone and Raspberry Pi single-board computers, and get our hands dirty building simple applications to control the boards and gather measurements from sensors connected to them, directly from the iPhone. The course should give you the background to build your own applications independently, using the hottest location-aware technology yet for any mobile platform.

Blinking the heartbeat LED of a BeagleBone from the iPhone

The workshop will be on Monday the 8th of October at the Hoxton Hotel in London, at the heart of Tech City and right next to Silicon Roundabout. I'm extending a discount to readers: 10% off the ticket price with discount code OREILLY10. That makes the early bird ticket just £449.10 (was £499), and if you miss the early bird deadline (the 1st of September), a full-price ticket is still only £629.10 (was £699).

Monday 8th October 2012

Hoxton Hotel, London

Early Bird Price: £499 (until 1st Sept.)

Normal Price: £699

Save 10% with code OREILLY10

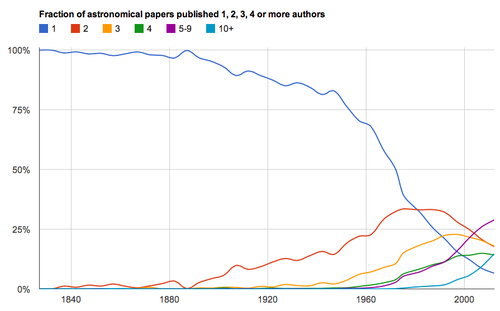

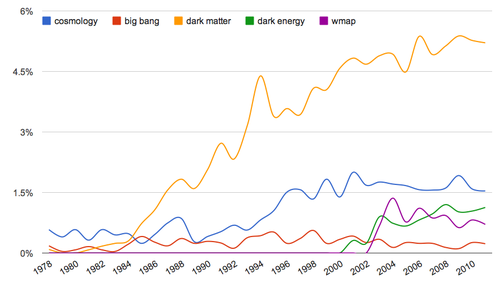

Fraction of astronomical papers published with one, two, three, four or more authors. CREDIT:

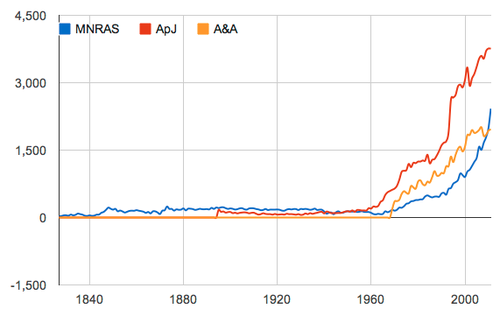

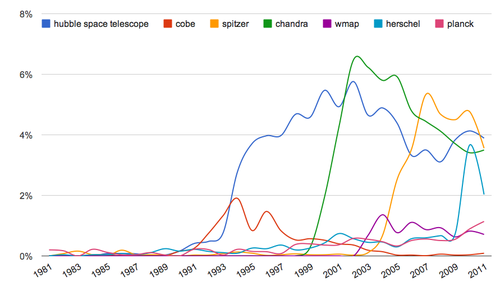

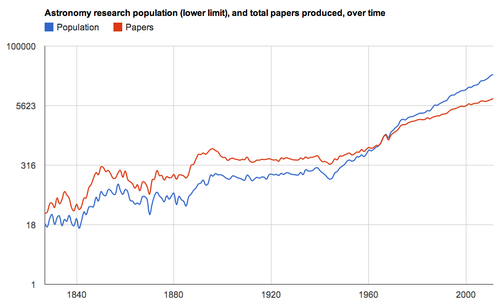

Fraction of astronomical papers published with one, two, three, four or more authors. CREDIT:  Compare the number of "active" research astronomers to the number of papers published each year (across all the major journals). CREDIT:

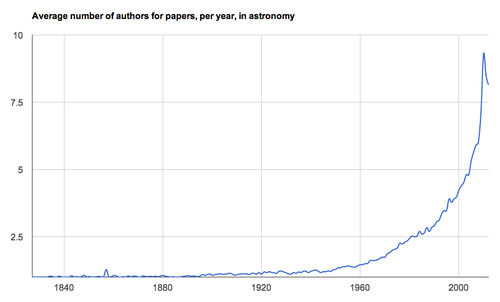

Compare the number of "active" research astronomers to the number of papers published each year (across all the major journals). CREDIT:  The above plot shows the average number of authors, per paper since 1827. CREDIT:

The above plot shows the average number of authors, per paper since 1827. CREDIT:

The SpaceX Dragon spacecraft on the end of the Canadarm2.

The SpaceX Dragon spacecraft on the end of the Canadarm2.

The Bigelow

The Bigelow  SpaceShipTwo flying with crew for the first time, during a dress rehearsal flight for its first free glide flight in 2010. Credit: Virgin Galactic/Scaled Composities

SpaceShipTwo flying with crew for the first time, during a dress rehearsal flight for its first free glide flight in 2010. Credit: Virgin Galactic/Scaled Composities Artist's rendition of a Dragon spacecraft using its SuperDraco thrusters to land on Mars. Credit: SpaceX.

Artist's rendition of a Dragon spacecraft using its SuperDraco thrusters to land on Mars. Credit: SpaceX.